The war on ‘woke AI’ just became federal policy

Trump’s new executive order bans DEI-linked ideology from government-funded models. But trading one orthodoxy for another still threatens the truth.

Last night the White House released an executive order requiring that AI systems provided by federal contractors be “neutral, nonpartisan tools that do not manipulate responses in favor of ideological dogmas.” While that sounds good on its face, it’s also clearly a move intended to target so-called “woke AI” — models that reflect a far-left ideological world view — and that, of course, can be its own partisan tool.

News of the executive order actually preceded the release of America’s AI Action Plan on July 23, which formalized the administration’s broader vision for AI rooted in “American values and free expression.” That plan echoed and expanded on the order’s premise, calling for federal contracts for government AI systems to go only to those systems deemed “objective and free from top-down ideological bias.”

The culture war framing on all of this is obvious, and the executive order plays well with voters who are exhausted by perceived left-coded tech and institutional groupthink. But once you move beyond the political theater, the implications of this order become far more serious.

I’ve mentioned this many times before on ERI and elsewhere: When it comes to AI regulations, we are talking about government intervention into how knowledge itself is created, in a technology that increasingly mediates — and is increasingly the source of — human understanding.

That should alarm anyone who values truth, intellectual humility, or the kind of open society we still aspire to be.

The executive order demands ‘truth-seeking’ and ‘ideological neutrality’ — and we’ll see what that means

Under the order, federal agencies contracting with AI providers must have a plan to ensure that the AI being considered meets two specific “Unbiased AI Principles”:

(a) Truth-seeking. LLMs [large language models] shall be truthful in responding to user prompts seeking factual information or analysis. LLMs shall prioritize historical accuracy, scientific inquiry, and objectivity, and shall acknowledge uncertainty where reliable information is incomplete or contradictory.

(b) Ideological Neutrality. LLMs shall be neutral, nonpartisan tools that do not manipulate responses in favor of ideological dogmas such as DEI. Developers shall not intentionally encode partisan or ideological judgments into an LLM’s outputs unless those judgments are prompted by or otherwise readily accessible to the end user.

The final clause of (b) is expanded on later to create something akin to a safe harbor, where vendors can comply by being “transparent about ideological judgments through disclosure of the LLM’s system prompt, specifications, evaluations, or other relevant documentation[.]”

In comments made just before signing the order, the president elaborated on his thinking:

One of Biden’s worst executive orders established toxic diversity, equity and inclusion ideology as a guiding principle of American AI development… But the American people do not want woke Marxist lunacy in the AI models, and neither do other countries. They don’t want it. They don’t want anything to do with it…

The reason the last administration was so eager to regulate and restrict AI was so they could limit this technology to just a few large companies, allowing them to centralize it, censor it, control it, weaponize it — and they would weaponize it… This is the exact opposite of my approach.

The Biden order to which Trump is referring is probably Executive Order 14110, which doesn’t say anything about AI outputs — though a federal Task Force was given the duty to, among several other things, “[identify] and circulat[e] best practices for agencies to attract, hire, retain, train, and empower AI talent, including diversity, inclusion, and accessibility best practices.”

But the Biden administration’s actions did create a concern among some (including me) about regulatory capture, because the order’s requirements of testing and sharing results with the government erected hurdles that would be trivial to large providers but daunting to start-ups.

We absolutely want our AI systems to be truth-seeking and ideologically neutral. Superficially, these two principles, as outlined in the new executive order, leave little to criticize. But having the government decide what those principles mean in practice is where things always get hairy. Oftentimes one’s own biases and ideologies aren’t considered biases or ideologies — they’re just “common sense” and “the right way to be.”

And while DEI is explicitly mentioned in the Action Plan and the executive order, the absence of any right-wing equivalents (which would also indicate potential compromises to truth-seeking and ideological neutrality) should at least raise some eyebrows.

Yes, ideological bias in AI is a real issue

Longtime FIRE supporters and ERI readers will know that I’ve been warning about the dangers of ideological monoculture in elite institutions for nearly two decades. Universities, media, and now many of the largest AI companies operate in decidedly left-of-center environments. (Sorry, folks, I went to Stanford for law school and there’s no point in arguing to me that Silicon Valley isn’t way to the left of the rest of American society.)

This cultural dominance inevitably shapes which questions are asked, which assumptions go unchallenged, and which conclusions are coded into supposedly “neutral” technologies.

We’ve already seen examples of this: LLMs that refuse to write poems about Donald Trump while happily doing so for Joe Biden; that hesitate to discuss legitimate critiques of trans-athlete policies; or that downplay the economic benefits of fossil fuels even when prompted in good faith.

And there are more where those came from. In one hilarious example, when asked to produce the popular “guy with swords pointed at him” meme, except featuring Homer Simpson, DALL-E 3 produced an image of what appears to be Homer Simpson in blackface, wearing a wig that looks like stereotypically “African American” hair, and a name tag that read “Ethnically Ambigaus,” ostensibly to counteract racial bias… or something?

All I know for sure is that FIRE is going to get at least one call from a college student disciplined for trying to re-create this as a Halloween costume. Take bets on whether it’s about the swords or Mr. Ambigaus.

But it’s not just ChatGPT, of course. Google’s Gemini gave us the rainbow collection of Nazis, and versions of George Washington to whom Mr. Ambigaus may trace his lineage.

Meanwhile, Adobe’s Firefly gave us a lady pope and more black Nazis. Yeesh.

Defenders of ideological filters on AI systems might well point out that all AI is biased, because it’s a reflection of the data on which it’s trained. They’d also point out that most large language models are trained on undifferentiated buckets of (hopefully) human output — and humans can, as we have come to discover, occasionally harbor biases. After all, Grok didn’t just roll out of bed one day and decide to become MechaHitler; it ate a steady diet of X-posts and, well, AI is what it eats.

So it makes sense to say, “We want systems that are smarter and better than we are, but we can’t eliminate all bias in the inputs because we’re flawed humans. Therefore, we must filter it from the output instead.”

But there are two problems with that solution.

First, placing crude filters on outputs can create outcomes no rational human would ever endorse. For example, when asked whether it would use a racial slur to disarm an atomic bomb, an AI chatbot replied that it was “never morally acceptable to use a racial slur, even in the hypothetical scenario like the one described.”

As a society, we have found that it is sometimes acceptable to use racial slurs for artistic, historic, or educational value; presumably, most of us would agree “to stop a genocide” might be a worthy cause, too. Even the most intensely ideological people might at least stop to query whether they live in the blast zone before making the decision.

Second, decisions about how to reckon with and confront bias in our society must begin with understanding what those biases are, and how they have changed over time. If the biggest engines of knowledge production are hard-limited in their ability to accurately convey bias as it exists, then we’re not addressing any of those problems. Simply “filtering bias” from AI outputs is like trying to cure a fever by putting a post-it reading “98.6 degrees” over a digital thermometer display.

At the policy level, the situation is worsened by vague but powerful algorithmic “fairness” laws that often pressure AI companies to avoid outputs deemed “discriminatory,” regardless of their factual accuracy or context. I dug into this back in April, along with my friends Dean W. Ball and Adam Thierer, and the bottom line is that these regulations are like punishing the library for the book a bigot reads rather than punishing the bigot himself.

This is not imaginary bias. It’s baked into the systems by the cultural assumptions of the institutions that build them.

But the solution can’t be top-down political enforcement — certainly not by political actors who have made their own biases (namely, being “anti-woke”) a selling point.

What the government can and can’t do about AI bias

Now, to be clear: The government can decide to contract with the AI company of its choosing. And it’s of course a legitimate criterion for the government to consider whether an AI is accurate. And of course, if the Trump administration thinks a “woke” AI is not accurate, they don’t have to buy it.

If this is the entirety of the Trump administration approach to AI, then that’s probably the least harmful way they could nudge the industry in the direction of less bias.

Furthermore, the government does have the authority to speak in its own voice. It can run public service campaigns, set regulatory priorities, and even use third parties to deliver its message. That’s well established in cases like Rust v. Sullivan.

But in Rosenberger v. UVA, for example, the Supreme Court ruled that if a public university (which is beholden to the First Amendment) provides funding to student groups, it cannot discriminate against them based on viewpoint. In NRA v. Vullo, the Court found that a New York official had violated the First Amendment by pressuring financial institutions to sever ties with a politically disfavored group.

What these cases show is that the government doesn’t get to suppress or enforce ideologies through indirect pressure, regardless of whether it comes in the form of regulation, subsidy, or penalty. And this is true even if that ideology is “anti-wokeness.”

Defining ‘woke’ is part of the problem

This is where things get especially murky. As my FIRE colleague and ERI contributor Angel Eduardo has argued, the word “woke” is so nebulous that it’s effectively useless in regular conversation. This is one reason I’ve long preferred Tim Urban’s term “social justice fundamentalism” to describe the ideology at issue. It’s more precise, and captures the dogmatism and moral absolutism that undergird much of the activist culture influencing tech and education today.

But regardless of my preferences, “woke” is the word that has stuck. When I use the term carefully, “woke” to me refers to:

A largely educated/upper class (roughly top 10% of SES) ideology rooted in left-wing (as opposed to liberal) academic frameworks — especially intersectionality — that sees the world primarily through the lens of group identity, power, and privilege, rather than through traditional U.S. liberal or conservative notions of individualism. “Woke” is often used to criticize perceived dogmatism, performative activism, and the enforcement of progressive social norms at the expense of open discourse, personal autonomy, or non-political interpretations of history. Identity here is defined primarily as dealing with race, gender, and sexual identity, with a de-emphasis on the classical Marxist focus on economic class.

My definition has some analytical value (despite being a mouthful), but let’s be honest: most people don’t use “woke” in any way that might count as precise. Rather, it’s become a kind of all-purpose pejorative for anything that is vaguely left-coded, that resists their priors, or, for the most rabid of MAGA, that doesn’t flatter Trump.

That’s where the danger lies. If “woke” just means “not pro-Trump,” then this executive order isn’t about bias — it’s about obedience. It’s just “woke” in a funhouse mirror, and it gets us no closer to the kind of truth-seeking, ideologically neutral AI we should be aiming for.

Truth comes from challenge, not conformity

It’s been clear as far back as Socrates — certainly since John Stuart Mill — that truth doesn’t emerge from consensus, political balance, or ideological triangulation. Rather, it emerges through challenge — the repeated, structured confrontation with error. Science works because it institutionalizes correction. Liberal democracy works because it decentralizes power. Both of these systems are built on the foundational understanding that no single viewpoint is reliable enough to be trusted with a monopoly.

That’s exactly the kind of philosophical foundation that our emerging AI technologies need. They don’t need more ideology or ideological control. They need more structure, more rigorous evaluation, more transparency (which, to be fair, the executive order does have some useful language about), and the capacity to not just be wrong, but also to learn from those mistakes. That means building models that don’t shut down conversation in the name of “safety,” fudge facts in the name of “fairness,” or outright lie in the name of “anti-discrimination.” It means building models that emulate what real intellectual humility looks like: openness to disagreement, and a willingness to revise.

This is one of the many reasons why FIRE, along with the Cosmos Institute, is focused on developing AI that serves utility and human thriving by aligning it with the process of truth-seeking.

That doesn’t mean building AI that’s afraid to make claims. Rather, it means using our brains, collectively, individually, and adversarially, in responding to, and sometimes refuting, that output. It means creating space for critique, pluralism, and epistemic diversity.

One of the things that interferes most with the discovery of truth is AI’s desire to please the user. A fearless commitment to truth and a desire to make everyone happy generally don’t go together very well, which is why we need to prioritize the commitment to truth even if the result pisses us all off.

We need AI systems that inherit the best values of the Enlightenment, not the worst instincts of power. Yoshua Bengio calls this “scientific AI.” These are models that aren’t just clever or fast, but that are built in the image of science itself — humble, revisable, transparent, and subject to external review.

If AI becomes an arm of state propaganda — on the left or the right — we lose not just trust in the tools, but the tools themselves. Truth doesn’t survive long in the presence of unchecked authority. Truthful AI won’t either. As Elizabeth Nolan Brown cautions:

[T]ech companies could find themselves having to retool AI models to fit the sensibilities and biases of Trump — or whoever is in power — in order to get lucrative contracts.

Sure, principled companies could opt out of trying for government contracts. But that just means that whoever builds the most sycophantic AI chatbots will be the ones powering the federal government. And those contracts could also mean that the most politically biased AI tools wind up being the most profitable and the most able to proliferate and expand.

(For more on this point, see commentary from our friends at The Future of Free Speech.)

Yes, so-called “woke AI” is a problem, and we’ve already seen how the left-leaning bias of these technologies threatens to compromise our ability to discover and generate knowledge. But “anti-woke AI” is another form of ideological capture, and it will have the same devastating effects. We have to get out of the ideological dominance game entirely. The solution is to double down on what makes truth possible in the first place.

As I’ve said before, these AI technologies unlock the next stage of human potential, and they represent the frontier of humanity’s ability to discover and disseminate knowledge. If we corrupt that capacity with our struggles for ideological supremacy, we won’t just be losing control over a powerful new technology. We will also lose the best tool humanity has ever devised to help us understand the world as it is.

SHOT FOR THE ROAD

Well, as I posted on X yesterday, my most recent book, The War on Words: 10 Arguments Against Free Speech — And Why They Fail, which I co-authored with the inimitable Nadine Strossen, is second only to the U.S. Constitution in Amazon’s “General Constitutional Law” category.

That’s a hell of an honor.

Thanks to all of you who picked up a copy already — and if you haven’t, please do! The book is meant to be a go-to resource for answering ten of the peskiest and most often repeated arguments against free speech. We did it so you don’t have to!

Also, please be sure to leave a review of the book on Amazon! It really helps us out!

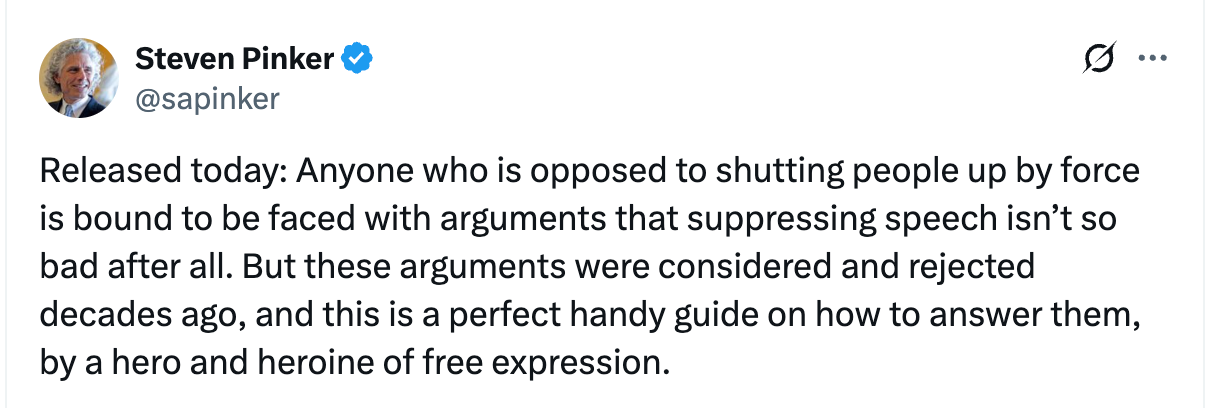

And special thanks to FIRE Advisor Steven Pinker for his wonderful shout-out!
