METACOMMENTARY: Why Meta’s move to community notes is good

Contrary to some media hyperventilation, there was a lot to like in Zuckerberg’s announcement on Tuesday

On Tuesday, Mark Zuckerberg announced that Meta* would make a number of changes to its content moderation policies. Those changes were, in fact, a long time coming, and are good both for the platform and the growth of a free speech culture.

One of those changes that we think should've received a great deal more attention was to call on the incoming president to help reverse the international tilt toward censorship. We’ve been concerned about that since the beginning of ERI, and that’s why we include an international news section in every Weekend Update, curated by FIRE’s Senior Scholar for Global Expression, Sarah McLaughlin (who also writes the essential Free Speech Dispatch for FIRE). It’s clear from his announcement that Zuckerberg has been seeing the same things we have.

For example, Zuckerberg mentioned Europe’s “ever-increasing number of laws institutionalizing censorship.” Some of what he’s talking about:

In December, Ireland’s media regulator ruled that because Meta was “exposed to terrorist content,” it could “supervise and assess” mitigation efforts, and issue fines if they were insufficient.

In November, Telegraph columnist Allison Pearson was visited by police investigating a “non-crime hate incident” (NCHI) over a year-old post on X, but police would not tell her which post it was.

In October, counter-terrorism police officers raided the London home of anti-Zionist journalist Asa Winstanley and seized his electronic devices; an officer told Winstanley the raid was linked to his social media posts.

In August, the UK Government warned its citizens on X to “think before you post.”

As Sarah put it, “[t]he UK’s relationship with free expression is currently in a free fall.” And the rest of Europe isn’t far behind. A favorite from the last year, though not computer-enabled, was when German police forbade pro-Palestinian protesters from speaking Irish, because their speech could not be monitored for hate speech.

Zuckerberg also mentioned that “Latin American countries have secret courts that can order companies to quietly take things down.” Probably, though they also have not-so-secret courts that can order companies to take things down. X spent 40 days blocked in Brazil for refusing to comply with an order to suspend far-right accounts.

Another change is to remove restrictions on topics like immigration and gender that, in Zuckerberg’s words, are “out of touch with mainstream discourse.” He continued, “What started as a movement to be more inclusive has increasingly been used to shut down opinions and shut out people with different ideas, and it’s gone too far.” That mirrors exactly what we have seen on campus. Debates over immigration and gender ideology dominate in many circles today, and they are both, to say the least, matters of public concern that people should have the opportunity to argue through.

Zuckerberg also announced in his video that Meta would move its Trust and Safety and Content Moderation teams from California to Texas. “As we work to promote free expression, I think that it will help us build trust to do this work in places where there’s less concern about the bias of our teams,” Zuckerberg said.

Some have questioned whether moving from Palo Alto to, say, Austin makes much difference. Sure, Austin may have a groupthink problem, but the groupthink in Palo Alto was exquisite even 25 years ago. As we know from the study of group polarization, exposure to people with different points of view can have a moderating effect. That's one of the reasons you can expect the groupthink to be worse in Palo Alto, which is a bright blue spot in a bright blue ocean, than in Austin, which is a light blue spot in a red sea.

However, one of Meta’s changes has particularly roiled media observers: the elimination of fact-checkers in favor of a “community notes” system like the one on X.

On Vox, a story says the move “signals a willingness among tech companies to cater to Trump.” The AP’s headline agrees, calling it the “latest bow to Trump.” CNN’s headline advises that “Calling women ‘household objects’ [is] now permitted on Facebook,” noting in the text that one of the provisions eliminated from Meta’s policies explicitly prohibited such a dehumanizing metaphor. The New York Times jumped headfirst into Poe’s Law territory with its headline: “Meta Says Fact-Checkers Were the Problem. Fact-Checkers Rule That False.” CNN’s Brian Stelter called Meta’s changes a “MAGA makeover.” MSNBC’s Joe Scarborough called the decision "horrific" and suggested Zuckerberg was telling the incoming president, “Yes, yes, sir, overlord, I will now do what you want me to do.” (H/t to TheWrap for catching that!)

Those of us who have watched professors and students getting in trouble on campus for dissenting opinions on practically every hot-button issue in the United States for the last two decades are not the least bit surprised by this revolt against authority. (If you want my best accounts, check out my books: “Unlearning Liberty” from 2012, my booklet “Freedom From Speech” from 2014, my co-authored book with Jonathan Haidt “The Coddling of the American Mind” from 2018, and my most recent book with Rikki Schlott, “The Canceling of the American Mind.” Also, all of the work of FIRE and most of the posts on this Substack.) And watching members of elite media freak out about a system that means nothing more than a move from an unsuccessful top-down verification system to a distributed system can be almost comical. Don't they understand that the entire western system that Jonathan Rauch has dubbed “liberal science” is a distributed system of knowledge creation?

Also, we’d push back against the idea that Meta’s shift here is just about the next president. Mark Zuckerberg has long been concerned with Facebook (or anyone, for that matter) being made the “arbiter of truth.”

In 2016, when some in the media questioned Facebook’s role in the election, Zuckerberg wrote a response cautioning against the idea that Facebook should be deciding who should have a voice:

While some hoaxes can be completely debunked, a greater amount of content, including from mainstream sources, often gets the basic idea right but some details wrong or omitted. An even greater volume of stories express an opinion that many will disagree with and flag as incorrect even when factual. I am confident we can find ways for our community to tell us what content is most meaningful, but I believe we must be extremely cautious about becoming arbiters of truth ourselves.

In May 2020, a time when Trump’s prospects of a political return seemed relatively improbable, Meta declined to censor Trump’s election claims. Zuckerberg said then, “I just believe strongly that Facebook shouldn’t be the arbiter of truth of everything that people say online.” In 2023, Zuckerberg said that the scientific establishment “asked for a bunch of things to be censored that, in retrospect, ended up being more debatable or true. That stuff is really tough, right? It really undermines trust.”

The problem with viewing a move away from fact-checkers as a net negative is that fact-checking didn’t work the way we all wanted it to work. Fact-checkers are sometimes wrong, and even when they get it right, the existence of top-down content filtering reduces trust and confidence in all content on a platform. As David Moschella of the Information Technology and Innovation Foundation explained, “It’s not that these groups [government, media, scientists, and fact-checkers] can’t be trusted; it’s that they all have their own agendas and can’t be trusted to be right all of the time.”

Fact-checkers have reduced trust in platforms. In our social media report released last summer, our survey found that “61% of Democrats, 62% of independents, and 73% of Republicans don’t trust social media companies to be fair about what can be posted on their platform.” As Greg observed on X, this was entirely predictable, given that fact-checkers, both on and off campus, have rightfully lost the public’s trust.

Viewing a retreat from a system that doesn’t work (and undermines epistemic trust) as a concession to Trump is short-sighted and self-defeating.

The New York Times story quotes fact-checkers making the point that the checkers didn’t choose what Meta would do with their fact-checks, Meta did. To the extent people are looking to assign blame for prior Meta censorship, that might be an interesting conversation. But it doesn’t justify perpetuating the system that censored truthful information and, worse, reduced public confidence in the information contained on social media.

Putting aside COVID and certain laptops, the most recent news story that undermined trust in both the media and government as fact-checkers was probably Joe Biden’s cognitive decline, which a great number of sources worked to tell you was not real — except then later it really was.

Even when fact-checkers weren’t wrong, some had clearly gone too far in a way that revealed an obviously non-neutral point-of-view. We’re thinking here of Snopes fact-checking the Babylon Bee. It’s hard to maintain trust in a system that outsources its fact-checking to a group that reverently debunks claims like AOC repeatedly guessed “free” on “The Price is Right.” (Snopes has since introduced the “labeled satire” rating. The Babylon Bee has returned the favor with a story claiming that Snopes has introduced the “factually inaccurate but morally right” label, for claims that “aren’t technically correct but are on the right side of history.”)

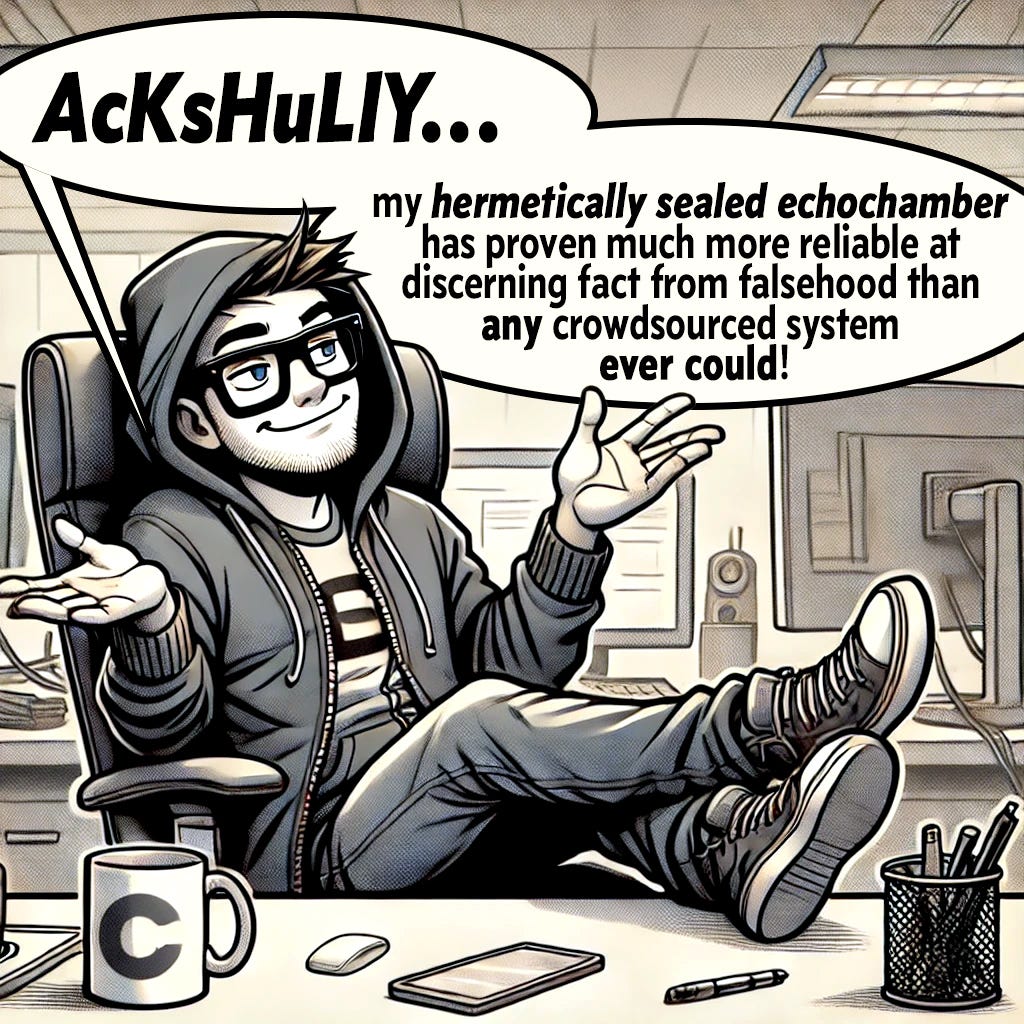

Is X’s community notes system perfect? No. It permits the lie to get halfway around the world, as the saying goes, because it takes time for a community note to rise to the level of being shown on a tweet. But saying a crowdsourced system is imperfect doesn’t mean it isn’t the best system we have available. And its greatest virtue is that it does not ask anyone to sit as the “arbiter of truth.”

Meta’s changes are not the death of speaking from authority; they just mean the authority will have to be cited by someone sitting as a co-equal voice in a system that doesn’t censor based on the all-too-frequently imperfect perspective of an insular group that, like all of us, is subject to its own biases and blind spots. A censor being wrong about something is much, much worse than any of us individually being wrong. Getting rid of that moderation is a step in the right direction, because it means we can at least hear each other out, however wrong we individually might be.

Note: ERI co-conspirator Angel Eduardo realized we needed to call this METACOMMENTARY!

*Meta donates to FIRE

Shot for the road

John Stossel takes a look at the documentary “The Coddling of the American Mind,” now available on Apple, Amazon, Google, and here on Substack!
